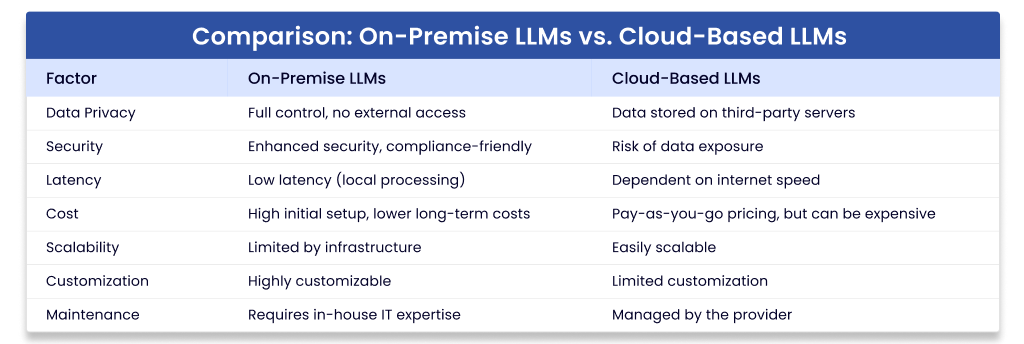

Large Language Models (LLMs) are reshaping how enterprises interact with data, automate workflows, and make decisions. Even as AI adoption accelerates, many enterprises remain cautious about full integration because of concerns over cost, security, and regulatory compliance.

The prevalent approach to LLM adoption relies heavily on cloud-based solutions provided by major vendors. While these platforms offer ease of access and rapid deployment, they come with inherent limitations—ranging from high costs and latency to security vulnerabilities and lack of control. This has led many organizations to explore the benefits of deploying LLMs on-premise, enabling them to leverage AI while maintaining full control over their infrastructure and data.

In this article, we explore why enterprises should consider on-premise LLMs, how to deploy them effectively, and who stands to benefit the most from this approach.

Why Deploy LLMs On-Premise?

1. Enhanced Data Security & Privacy

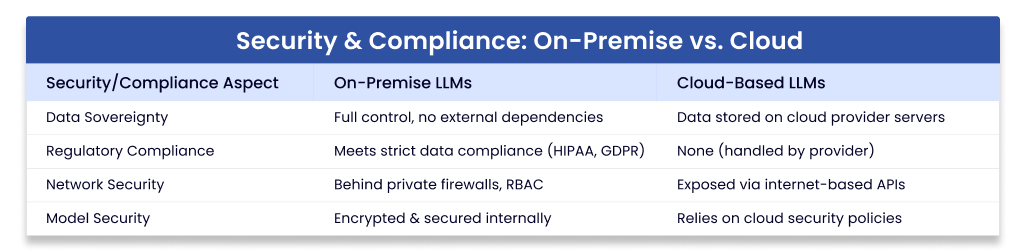

Data security is one of the most pressing concerns in AI adoption. Industries such as finance, healthcare, and government handle vast amounts of sensitive information that must remain within secure environments to comply with strict regulations. On-premise LLMs ensure that confidential data never leaves an organization's controlled infrastructure, reducing exposure to data breaches, leaks, or unauthorized access.

2. Regulatory & Compliance Requirements

Many industries are subject to stringent regulatory mandates regarding data residency and processing. For instance, healthcare providers in the United States must comply with HIPAA, and organizations that handle the personal data of EU residents must comply with GDPR. These and other data governance laws often require data to be stored within specific geographical regions or private infrastructures. On-premise LLM deployment enables companies to remain compliant while using AI capabilities without sending data to external providers.

3. Customization & Fine-Tuning for Specific Use Cases

Cloud-based LLMs are typically trained for general-purpose tasks, which often results in suboptimal performance for industry-specific applications. On-premise deployment allows organizations to fine-tune models on proprietary datasets without that data leaving their environment, improving accuracy and relevance for their domain. By customizing LLMs for niche applications, businesses can gain a competitive advantage, and a smaller specialized model can also be cheaper to run than a large general-purpose one.

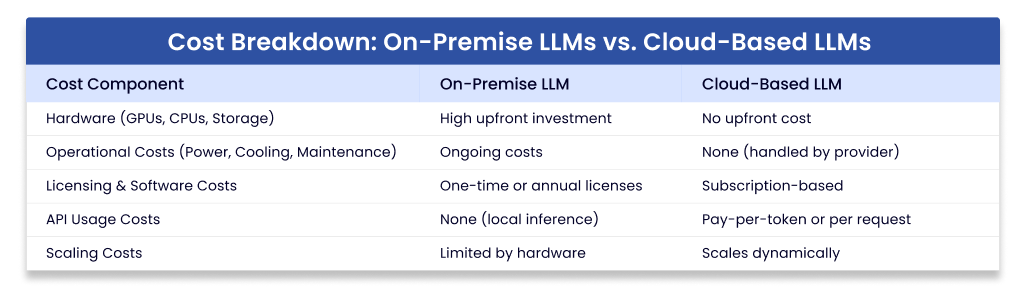

4. Lower Operational Costs Over Time

Cloud-based LLM solutions operate on a pay-as-you-go model, making them appealing for initial adoption. However, because billing is tied to usage, costs climb steeply as volume scales, especially for high-volume applications like document summarization, customer service automation, and large-scale text analysis. Investing in on-premise AI infrastructure can offer substantial long-term savings by replacing recurring API fees with more predictable operational expenses.
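To make the trade-off concrete, the following is a minimal break-even sketch. All figures (hardware cost, monthly operating cost, token volume, and API price) are hypothetical placeholders rather than vendor quotes, and the model deliberately ignores factors such as hardware depreciation and staffing changes.

```python
from typing import Optional


def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Recurring cloud API spend for a given monthly token volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_months(
    hardware_cost: float,
    monthly_on_prem_opex: float,
    tokens_per_month: float,
    price_per_million_tokens: float,
) -> Optional[float]:
    """Months until the upfront hardware cost is recovered by avoided API fees.

    Returns None when the API is cheaper every month, in which case
    on-premise hardware never pays for itself at this volume.
    """
    api_cost = monthly_api_cost(tokens_per_month, price_per_million_tokens)
    monthly_saving = api_cost - monthly_on_prem_opex
    if monthly_saving <= 0:
        return None
    return hardware_cost / monthly_saving


# Hypothetical inputs chosen only for illustration.
months = break_even_months(
    hardware_cost=250_000,          # one-time purchase of servers and GPUs
    monthly_on_prem_opex=6_000,     # power, cooling, maintenance
    tokens_per_month=2_000_000_000,
    price_per_million_tokens=10.0,
)
print(f"Break-even after about {months:.1f} months")
```

Under these assumed numbers the hardware pays for itself in roughly a year and a half. At low volumes the function returns `None`, which reflects the point above that pay-as-you-go pricing remains attractive for initial adoption.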

5. Performance & Latency Optimization

Real-time AI applications, such as chatbots, automated assistants, and decision-support systems, demand low latency and high-speed processing. Cloud-based LLMs can suffer from slow or variable response times because of network round trips and shared resources. On-premise deployments remove these external dependencies, enabling enterprises to achieve predictable, high-speed performance tailored to their operational needs.

How to Deploy LLMs On-Premise

1. Infrastructure Setup: Choosing the Right Hardware

Deploying an on-premise LLM requires high-performance computing (HPC) infrastructure capable of handling intensive processing workloads. Key hardware components include:

- GPUs/accelerators: High-end devices (e.g., NVIDIA A100, H100, AMD MI300X) for model training and inference.

- Storage: Fast SSDs/NVMe for rapid data retrieval and model execution.

- Networking: High-speed connectivity to facilitate distributed computing.

- Scalability: Modular architecture to support future growth and additional AI workloads.
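When sizing the accelerators listed above, a useful first estimate is the memory needed just to hold the model weights: parameter count multiplied by bytes per parameter. The sketch below illustrates that arithmetic. The `overhead_factor` of 1.2 is an assumed allowance for activations and cache, not a measured value, and real requirements depend on batch size and context length.

```python
import math

# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Memory in GB (10^9 bytes) needed to hold the model weights alone."""
    return params_billions * 1e9 * BYTES_PER_PARAM[precision] / 1e9


def gpus_needed(
    params_billions: float,
    precision: str,
    gpu_memory_gb: float,
    overhead_factor: float = 1.2,  # assumed headroom for activations and cache
) -> int:
    """Minimum number of GPUs whose combined memory fits weights plus overhead."""
    required_gb = weight_memory_gb(params_billions, precision) * overhead_factor
    return math.ceil(required_gb / gpu_memory_gb)


# A 70-billion-parameter model on 80 GB accelerators.
print(weight_memory_gb(70, "fp16"))   # weights alone at 16-bit precision
print(gpus_needed(70, "fp16", 80))    # GPUs needed at 16-bit precision
print(gpus_needed(70, "int4", 80))    # GPUs needed after 4-bit quantization
```

This kind of estimate shows why precision choices and hardware planning are linked: the same model that needs several GPUs at 16-bit precision may fit on a single device once quantized.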

2. Selecting the Right LLM

Organizations can either build their own LLMs from scratch or start from openly available pre-trained models such as:

- LLaMA (Meta AI)

- Falcon (TII Abu Dhabi)

- Mistral & Mixtral (Mistral AI)

- GPT-4 (proprietary; its weights are not released, so it is reachable only through hosted offerings such as Azure OpenAI with private networking rather than true on-premise hosting)

- Custom-trained models based on domain-specific datasets

3. Fine-Tuning & Optimization

Once an appropriate model is selected, it must be fine-tuned to optimize performance for the organization’s use cases. Techniques include:

- Transfer learning: Adapting pre-trained models to new tasks.

- LoRA (Low-Rank Adaptation): Efficient parameter tuning to reduce computational costs.

- Distillation: Reducing model size while maintaining performance.

- Quantization: Optimizing memory usage and inference speed.
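Two of the techniques above lend themselves to a short numeric illustration. The sketch below is not a training recipe: it only counts the trainable parameters LoRA introduces for one weight matrix, and applies a simple symmetric (absmax) int8 quantization to a small list of weights. The function names and the example matrix size are chosen for illustration.

```python
from typing import List, Tuple


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA replaces a full d_out x d_in update with two low-rank factors,
    B (d_out x rank) and A (rank x d_in), so only these are trained."""
    return rank * (d_in + d_out)


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


# LoRA on a single 4096 x 4096 projection matrix with rank 8.
full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=8)
print(f"LoRA trains {lora:,} of {full:,} parameters ({lora / full:.2%})")

# Quantize a toy weight vector and measure the round-trip error.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max round-trip error: {max_error:.6f}")
```

The LoRA count shows why the technique reduces computational cost: for this matrix, well under one percent of the parameters are updated. The quantization round trip shows the trade-off in the other direction, where memory drops to one byte per weight at the price of a small, bounded rounding error.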

4. Deployment & Integration

After fine-tuning, the model is deployed within the enterprise infrastructure using:

- Kubernetes & Docker for containerized scaling and orchestration.

- ONNX Runtime & TensorRT for optimized inference.

- API gateways for seamless integration with enterprise applications.
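As a rough picture of what sits behind an API gateway, the sketch below exposes a model through a small internal HTTP endpoint using only the Python standard library. The `generate` function is a placeholder standing in for a real inference call, and the `/v1/complete` path and JSON field names are assumptions made for this example, not an established API.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


def generate(prompt: str, max_tokens: int) -> str:
    """Placeholder for the real model call; returns the first words of the prompt."""
    return " ".join(prompt.split()[:max_tokens])


def handle_completion(body: bytes) -> Tuple[int, dict]:
    """Validate a JSON request body and return (HTTP status, response payload)."""
    try:
        payload = json.loads(body)
        prompt = payload["prompt"]
        max_tokens = int(payload.get("max_tokens", 64))
    except (ValueError, KeyError, TypeError, AttributeError):
        return 400, {"error": "expected a JSON object with a 'prompt' field"}
    if not isinstance(prompt, str):
        return 400, {"error": "'prompt' must be a string"}
    return 200, {"completion": generate(prompt, max_tokens)}


class CompletionHandler(BaseHTTPRequestHandler):
    """Routes POST /v1/complete (hypothetical path) to handle_completion."""

    def do_POST(self) -> None:
        if self.path != "/v1/complete":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, result = handle_completion(self.rfile.read(length))
        data = json.dumps(result).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(host: str = "127.0.0.1", port: int = 8080) -> None:
    """Start the server; binds to localhost so only the gateway can reach it."""
    HTTPServer((host, port), CompletionHandler).serve_forever()
```

Calling `serve()` starts the endpoint. In a containerized setup, a process like this would typically run inside each container, with the orchestrator handling replicas and the gateway handling authentication and routing. A production deployment would use a dedicated inference server rather than `http.server`.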

5. Ongoing Monitoring & Maintenance

AI models require continuous monitoring and updates to maintain accuracy and relevance. Key aspects include:

- Performance benchmarking to detect degradation.

- Security audits to identify vulnerabilities.

- Regular retraining using new datasets to adapt to evolving business needs.
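One simple way to turn performance benchmarking into an automated check is to compare a recent window of measurements against a stored baseline. The sketch below does this for response latency using the 95th percentile. The 20% tolerance is an assumed threshold, and the same comparison could be applied to an accuracy score on a fixed evaluation set.

```python
import statistics
from typing import Sequence


def p95(samples: Sequence[float]) -> float:
    """95th percentile: the last of the 19 cut points that split data into 20 groups."""
    return statistics.quantiles(samples, n=20)[-1]


def latency_regressed(
    baseline_ms: Sequence[float],
    current_ms: Sequence[float],
    tolerance: float = 0.2,  # assumed: flag if p95 is more than 20% above baseline
) -> bool:
    """True when current p95 latency exceeds the baseline p95 by more than the tolerance."""
    return p95(current_ms) > p95(baseline_ms) * (1 + tolerance)


# Illustrative data: a stable baseline and a window where 20% of requests slowed down.
baseline = [100.0] * 50
current = [100.0] * 40 + [300.0] * 10

print(p95(baseline), p95(current))
print(latency_regressed(baseline, current))
```

Using a high percentile rather than the mean keeps the check sensitive to the slow tail of requests, which is usually what users notice first.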

Who Benefits from On-Premise LLMs?

1. Enterprises with Sensitive Data

Industries such as finance, healthcare, and defense prioritize data security and compliance. On-prem LLMs offer them the ability to harness AI capabilities without compromising regulatory obligations or risking data exposure.

2. Companies with High AI Compute Needs

Organizations that run large-scale AI workloads, such as law firms processing large volumes of legal documents, research institutions analyzing scientific literature, and media companies managing content archives, can benefit from the cost efficiency and scalability of on-premise AI.

3. Businesses Seeking Competitive Differentiation

Companies looking to develop proprietary AI solutions tailored to unique industry challenges can use on-premise LLMs to build specialized applications, reducing dependency on third-party vendors and achieving superior performance at lower costs.

4. Government & Public Sector Agencies

Government institutions often have strict policies regarding data storage and AI deployment. On-premise Large Language Models help them maintain sovereignty over sensitive data while enabling AI-powered analytics and automation.

5. AI Research & Development Teams

R&D teams benefit from full access to model internals, enabling deeper experimentation, custom fine-tuning, and innovation without restrictions imposed by cloud vendors.

Conclusion

On-premise deployment of Large Language Models presents a compelling alternative to cloud-based solutions, offering unmatched security, compliance, customization, cost control, and performance benefits. While cloud-based Large Language Models may be suitable for initial adoption, enterprises seeking long-term AI integration and differentiation should strongly consider an on-premise approach.

By investing in robust AI infrastructure, fine-tuning models for specific applications, and maintaining full control over data processing, organizations can unlock the full potential of AI—driving innovation, efficiency, and competitive advantage in an increasingly AI-driven world.

For enterprises looking to embark on their on-premise AI journey, partnering with experienced AI/ML solution providers can accelerate deployment, ensuring optimal performance and maximum value from their AI investments.